As a singer I've always been interested in the human voice and how it can be "trained". I had a friend who studied music performance and was taught about how the voice worked, i.e. which parts of the larynx were used to produce which sounds.

http://en.wikipedia.org/wiki/Human_voice

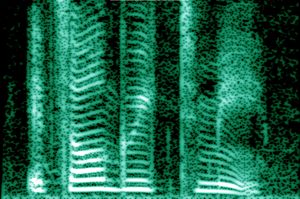

http://en.wikipedia.org/wiki/Spectrogram A tool that would be useful for seeing which frequencies a voice contains over time.
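To make the idea concrete, here is a minimal sketch of what a spectrogram computes: the signal is cut into short overlapping frames, each frame is windowed, and the magnitude of its discrete Fourier transform is taken. The function name `spectrogram`, the frame and hop sizes, and the 1000 Hz test tone are all my own illustrative choices, not anything from the linked article. It uses only the standard library and a slow direct DFT, so it is for understanding rather than real use.

```python
import cmath
import math


def spectrogram(samples, frame_size=128, hop=64):
    """Return one list of magnitudes (bins 0..frame_size//2) per frame."""
    # Hann window to reduce leakage between frequency bins.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / frame_size)
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(samples) - frame_size + 1, hop):
        chunk = [samples[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        for k in range(frame_size // 2 + 1):
            # Direct DFT of the windowed frame at bin k.
            acc = sum(chunk[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            magnitudes.append(abs(acc))
        frames.append(magnitudes)
    return frames


# Illustrative input: a pure 1000 Hz tone sampled at 8000 Hz (assumed values).
sample_rate = 8000
tone = [math.sin(2 * math.pi * 1000 * t / sample_rate) for t in range(1024)]

frames = spectrogram(tone)
peak_bin = max(range(len(frames[0])), key=lambda k: frames[0][k])
peak_hz = peak_bin * sample_rate / 128  # bin index -> frequency in Hz
print(peak_hz)
```

For a sung note, each frame would show a stack of peaks (the fundamental and its harmonics) instead of a single one, and plotting the frames side by side gives the familiar spectrogram picture.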

I would be keen to either emulate the human voice or create the "most satisfying" voice to human ears.

Articulatory synthesis

Articulatory synthesis refers to computational techniques for synthesizing speech based on models of the human vocal tract and the articulation processes occurring there. The first articulatory synthesizer regularly used for laboratory experiments was developed at Haskins Laboratories in the mid-1970s by Philip Rubin, Tom Baer, and Paul Mermelstein. This synthesizer, known as ASY, was based on vocal tract models developed at Bell Laboratories in the 1960s and 1970s by Paul Mermelstein, Cecil Coker, and colleagues.

Until recently, articulatory synthesis models have not been incorporated into commercial speech synthesis systems. A notable exception is the NeXT-based system originally developed and marketed by Trillium Sound Research, a spin-off company of the University of Calgary, where much of the original research was conducted. Following the demise of the various incarnations of NeXT (started by Steve Jobs in the late 1980s and merged with Apple Computer in 1997), the Trillium software was published under the GNU General Public License, with work continuing as gnuspeech. The system, first marketed in 1994, provides full articulatory-based text-to-speech conversion using a waveguide or transmission-line analog of the human oral and nasal tracts controlled by Carré's "distinctive region model".
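To get a feel for what a "waveguide analog" of the vocal tract means, here is a rough sketch of the classic Kelly-Lochbaum tube model, which treats the tract as a chain of short cylindrical sections with pressure waves scattering at each change of cross-section. This is not gnuspeech's actual code, and it does not include the nasal tract or the distinctive region model mentioned above. The function names, the boundary reflection values, and the example area values are all assumptions made up for illustration.

```python
def reflection_coefficients(areas):
    """Pressure-wave reflection coefficient at each junction between sections."""
    return [(a - b) / (a + b) for a, b in zip(areas, areas[1:])]


def simulate_tube(areas, excitation,
                  glottis_reflection=0.75, lip_reflection=-0.85):
    """Run an excitation signal through a chain of tube sections.

    Each section delays the forward and backward wave by one sample.
    The boundary reflection values are illustrative assumptions.
    """
    n = len(areas)
    k = reflection_coefficients(areas)
    forward = [0.0] * n   # waves travelling towards the lips
    backward = [0.0] * n  # waves travelling towards the glottis
    output = []
    for sample in excitation:
        # Whatever is not reflected at the lips is radiated as output.
        output.append((1.0 + lip_reflection) * forward[-1])
        new_forward = [0.0] * n
        new_backward = [0.0] * n
        # Glottis end: inject the excitation and partly reflect the returning wave.
        new_forward[0] = sample + glottis_reflection * backward[0]
        # Scattering at each junction between adjacent sections.
        for i in range(n - 1):
            new_forward[i + 1] = (1.0 + k[i]) * forward[i] - k[i] * backward[i + 1]
            new_backward[i] = k[i] * forward[i] + (1.0 - k[i]) * backward[i + 1]
        # Lip end: partial reflection back into the tube.
        new_backward[-1] = lip_reflection * forward[-1]
        forward, backward = new_forward, new_backward
    return output


# Made-up area values (arbitrary units), narrow at the back and wide at the front.
example_areas = [1.0, 1.0, 2.0, 4.0, 4.0, 3.0]
impulse = [1.0] + [0.0] * 63
response = simulate_tube(example_areas, impulse)
```

Changing the area values changes the resonances of the tube, which is the basic way an articulatory model turns a vocal tract shape into a vowel quality. Feeding `response` into a spectrogram would show those resonances as peaks.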

http://en.wikipedia.org/wiki/Voice_synthesizer

http://www.haskins.yale.edu/facilities/asy.html
